Creating Software Without Writing Code: Testing AI “Vibe Coding” Tools

Can you build a real, functional web app with nothing but natural language prompts?

We recently put vibe coding to the test at CodeYam, exploring AI tools that turn natural language into working software. These tools are becoming increasingly sophisticated, but how well do they perform when asked to build a moderately complex application and not just a pretty static landing page?

We tested six tools:

Bolt by StackBlitz

Firebase Studio (formerly IDX)

Base44 (just acquired by Wix)

Each offered natural language input and code output with a UI preview.

We also considered GitHub Spark (demo’d at GitHub Universe ’24), but it’s in limited preview and wasn’t testable.

The Task: Build a Real App

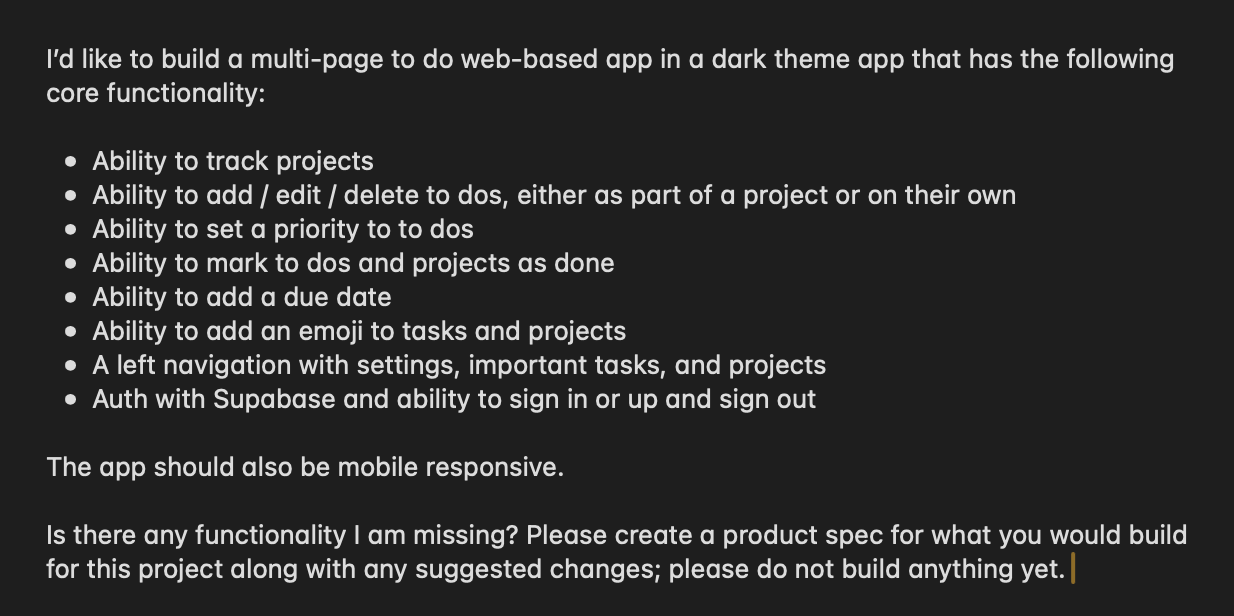

The assignment was straightforward but ambitious: create a multi-page, mobile-responsive, to-do app in a dark theme with a number of high-level features explicitly called out.

As a first step, we also asked each tool: “What functionality is missing? Generate a product spec.”

We then prompted the tools to build.

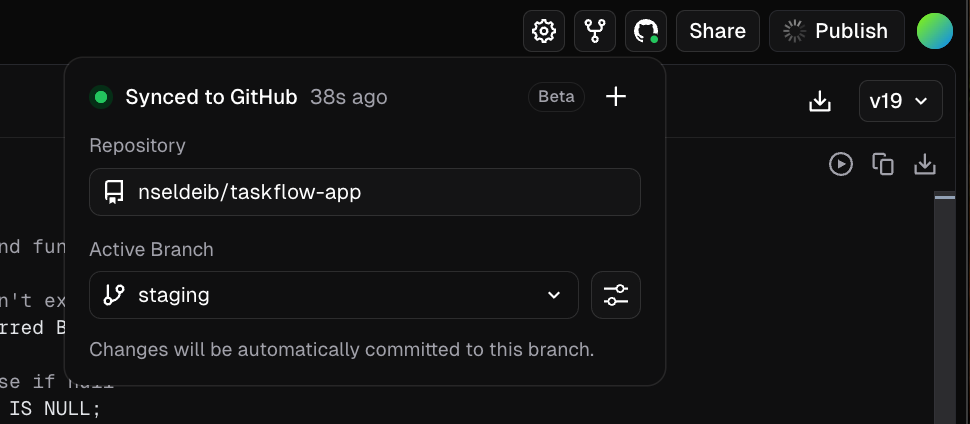

Results: From Prompt to Spec

The tools fell into three broad behavior categories:

Detailed planners: v0, Lovable, Base44, and Bolt produced multi-page specs.

Lightweight planners: Firebase Studio gave a sparse outline.

Opaque agents: Replit and Figma Make didn’t share much about their reasoning.

Despite their varied responses to the initial prompt, all tools attempted to build something close to the original vision. Some had a single-click “build” or “prototype” button. Others waited for a natural language command like “proceed.”

The most surprising reaction was Figma Make, which didn’t generate a product spec but did generate a skeleton architecture, complete with a “Hello, World” message.

One other quirk: development estimates, when provided, were usually given in weeks of human developer time, even when the prompt specified that the vibe coding tool would be used exclusively.

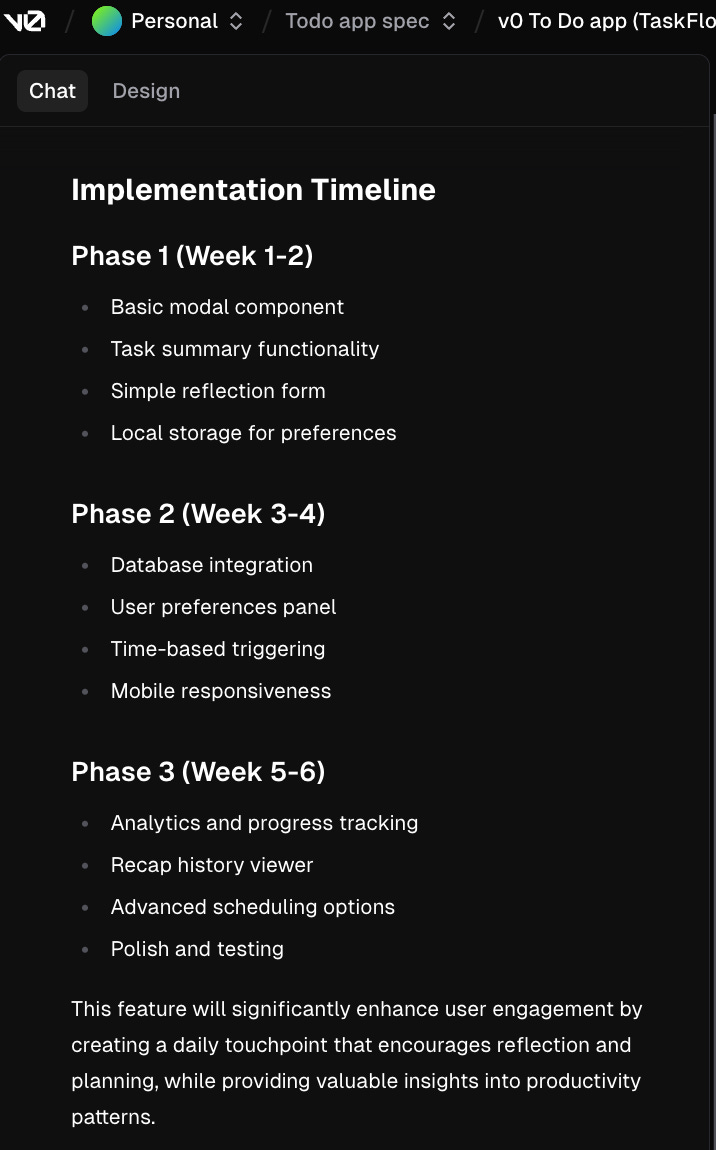

For instance, v0 gave this timeline when prompted to spec out a new to-do app feature:

Finally, although the initial prompt did not specify a language or framework, all of these tools defaulted to TypeScript and frameworks like Next.js. This made sense for the project at hand, though only certain tools disclosed this decision or their reasoning. Firebase Studio was a positive example here:

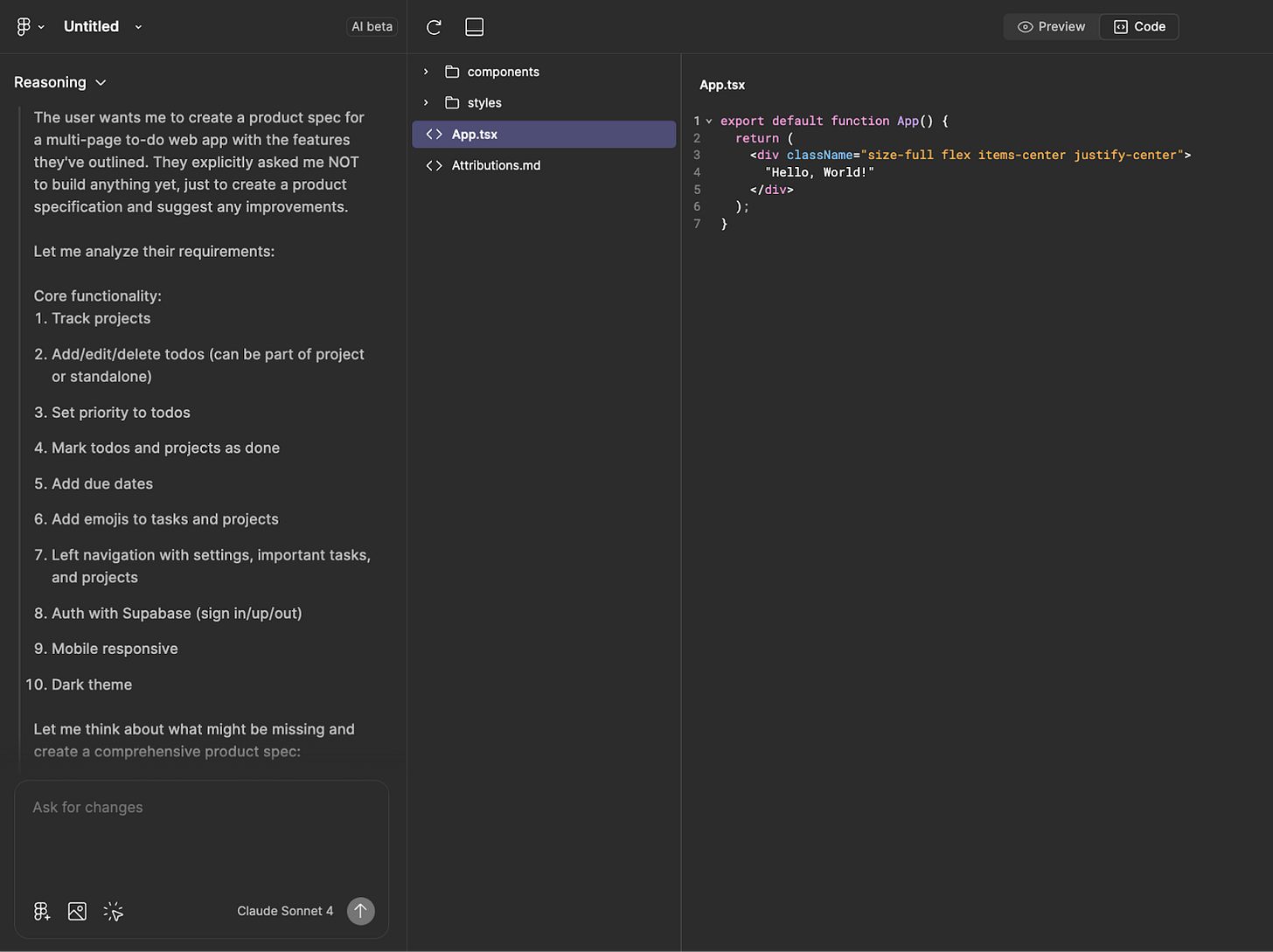

Fast ≠ Flawless

Most tools tried to generate full MVPs in a single shot. While impressive, this was not always reliable. Common issues included:

Random added features

Missing or broken functionality

Unpolished UI/UX

Misinterpreted design preferences

Iterative workflows are more token-efficient and tend to deliver better results. The push toward one-shot builds still needs refinement. That said, there were hints of a more iterative approach: certain tools flagged when a prompt might require a lot of tokens, and others suggested a sequence of tasks instead of doing them all at once. In a future test we may call out efficiency explicitly as a focus, but that was not part of this experiment.

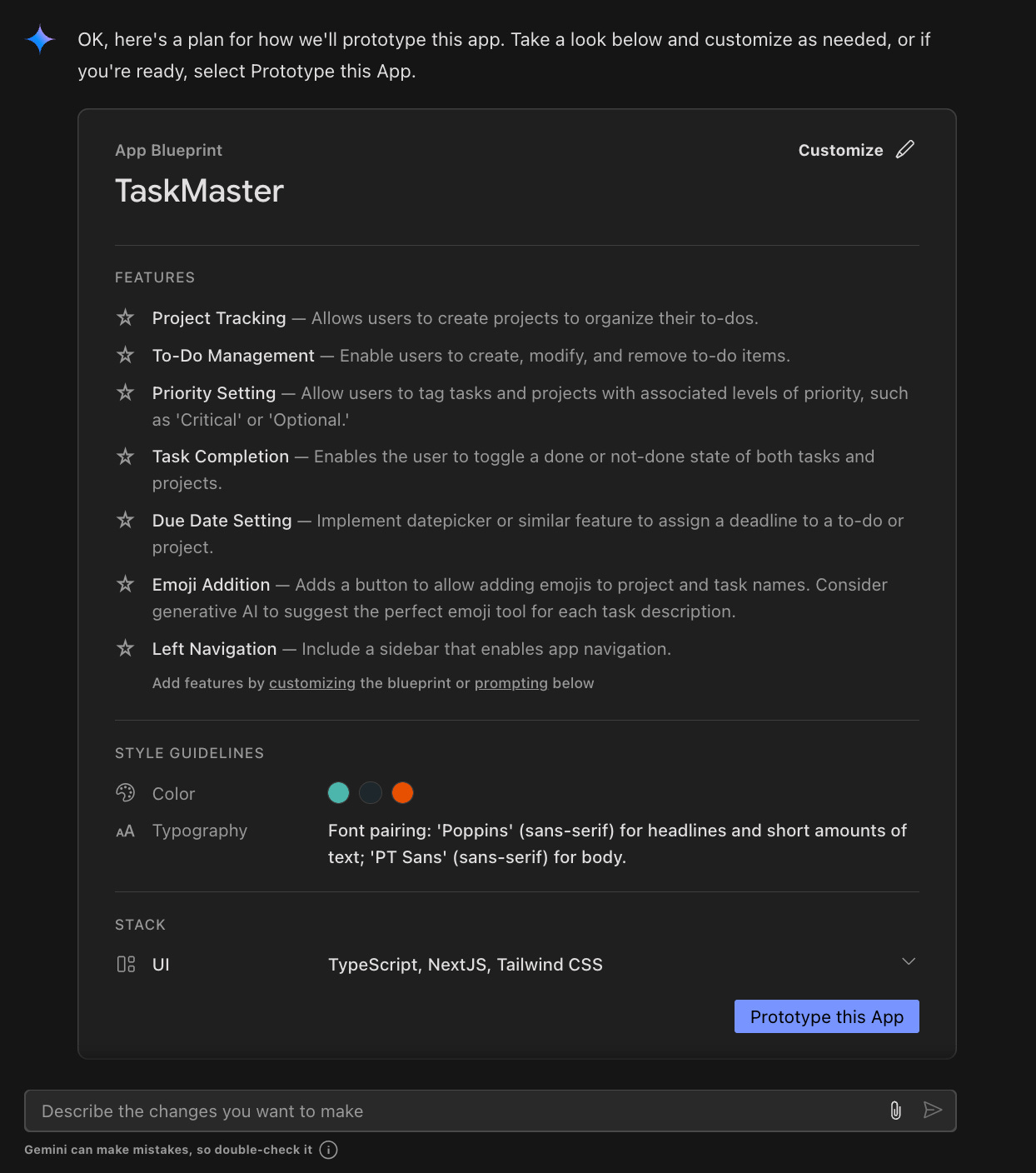

Database Integration: Supabase as Both a Benefit and Bottleneck

Supabase is the default backend for most of these tools, but support for connecting accounts varies:

v0: Easy to create a free Supabase project in a new account, but hard to add or switch to an existing org/project later. Required bouncing between dashboards (v0’s, Vercel’s, Supabase’s) and dealing with conflicting UI states.

Lovable and Bolt.new: Only allow granting full access to every project in an org, which we were not willing to do for our startup’s Supabase account. Too blunt for easy experimentation.

Replit: Manual copy/paste of Supabase keys. Clunky, but effective.

Firebase Studio: No support. Recommends manual editing of placeholder files and warns against “insecure” alternate approaches.

Base44: No support. They have their own authentication available.

Takeaway: most tools assume either a brand new user or an experienced engineer; few support the middle ground (e.g. someone testing personally, then connecting to a company org).

That’s a missed opportunity as people look to adopt these tools professionally, beyond hobbyist levels, either in their daily work or in new professional side projects.
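For tools that rely on pasting Supabase keys by hand, a small startup check can catch a missing or mistyped value early. The following is a minimal sketch, not any tool's actual integration: the setting names `SUPABASE_URL` and `SUPABASE_ANON_KEY`, the `Env` type, and both helper functions are assumptions made for illustration.

```typescript
// Hypothetical setting names; the tools we tested may use different ones.
interface SupabaseConfig {
  url: string;
  anonKey: string;
}

// An env-like object, e.g. values pasted into a tool's secrets panel.
type Env = Record<string, string | undefined>;

// Returns the trimmed value of a setting, or throws if it is absent or blank.
function requireSetting(env: Env, name: string): string {
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(`Missing setting: ${name}`);
  }
  return value;
}

// Validates the two pasted values up front so a bad paste fails at startup
// rather than on the first database call.
function readSupabaseConfig(env: Env): SupabaseConfig {
  const url = requireSetting(env, "SUPABASE_URL");
  const anonKey = requireSetting(env, "SUPABASE_ANON_KEY");
  if (!url.startsWith("https://")) {
    throw new Error("SUPABASE_URL should be an https:// URL");
  }
  return { url, anonKey };
}
```

Keeping the check separate from any client setup means the same function works whether the values come from a personal project or a company org.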

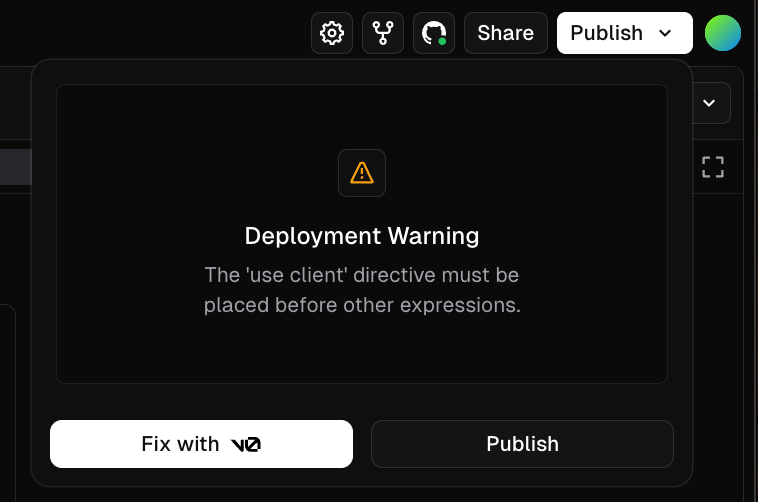

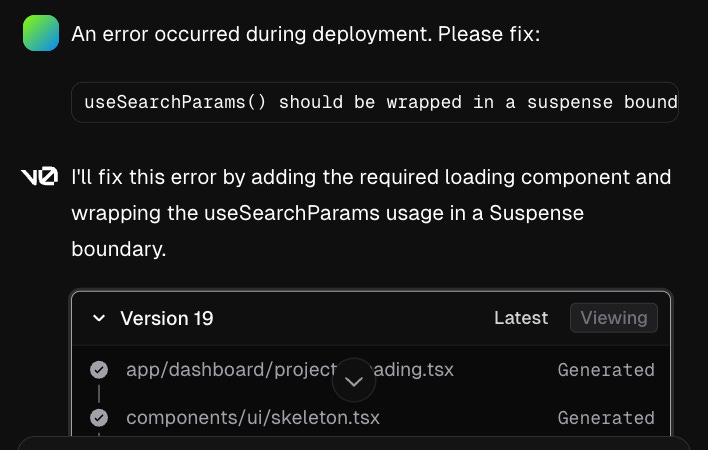

Automated Error Management

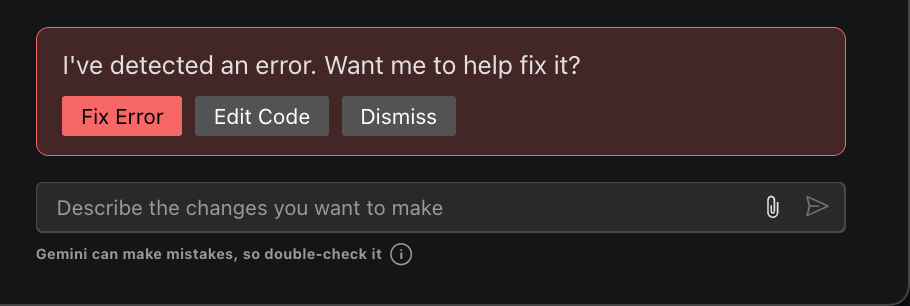

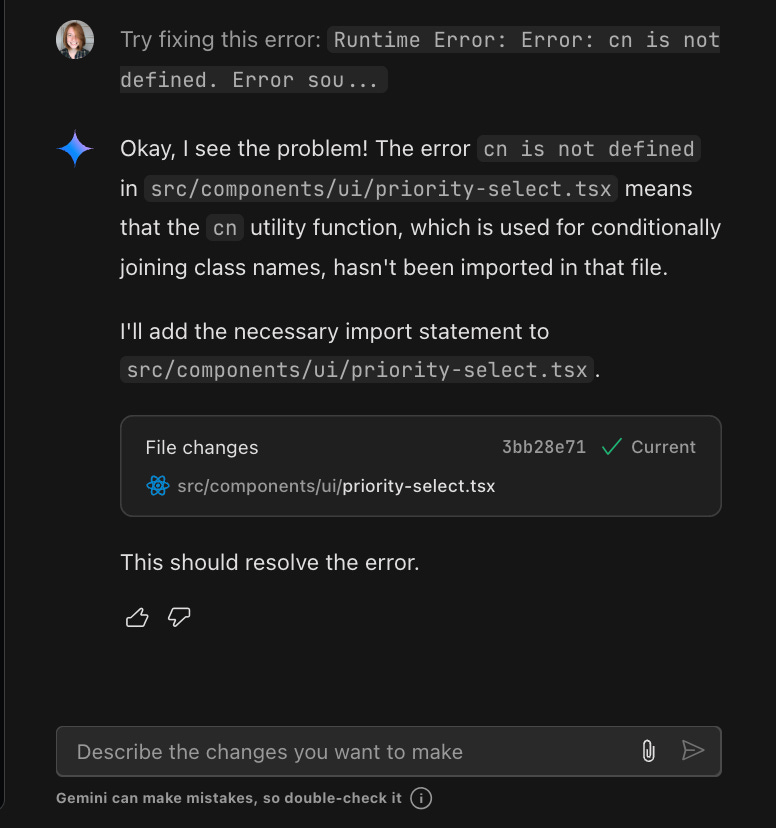

Most of the tools we tried offered some variation of a “fix it” button for automatically detected issues; in general, this is a great idea.

However, some of these issues were recurring and irrelevant to the project at hand. For instance, v0 repeatedly surfaced this MetaMask bug, which has nothing to do with our app, and there is no way to dismiss it once and for all:

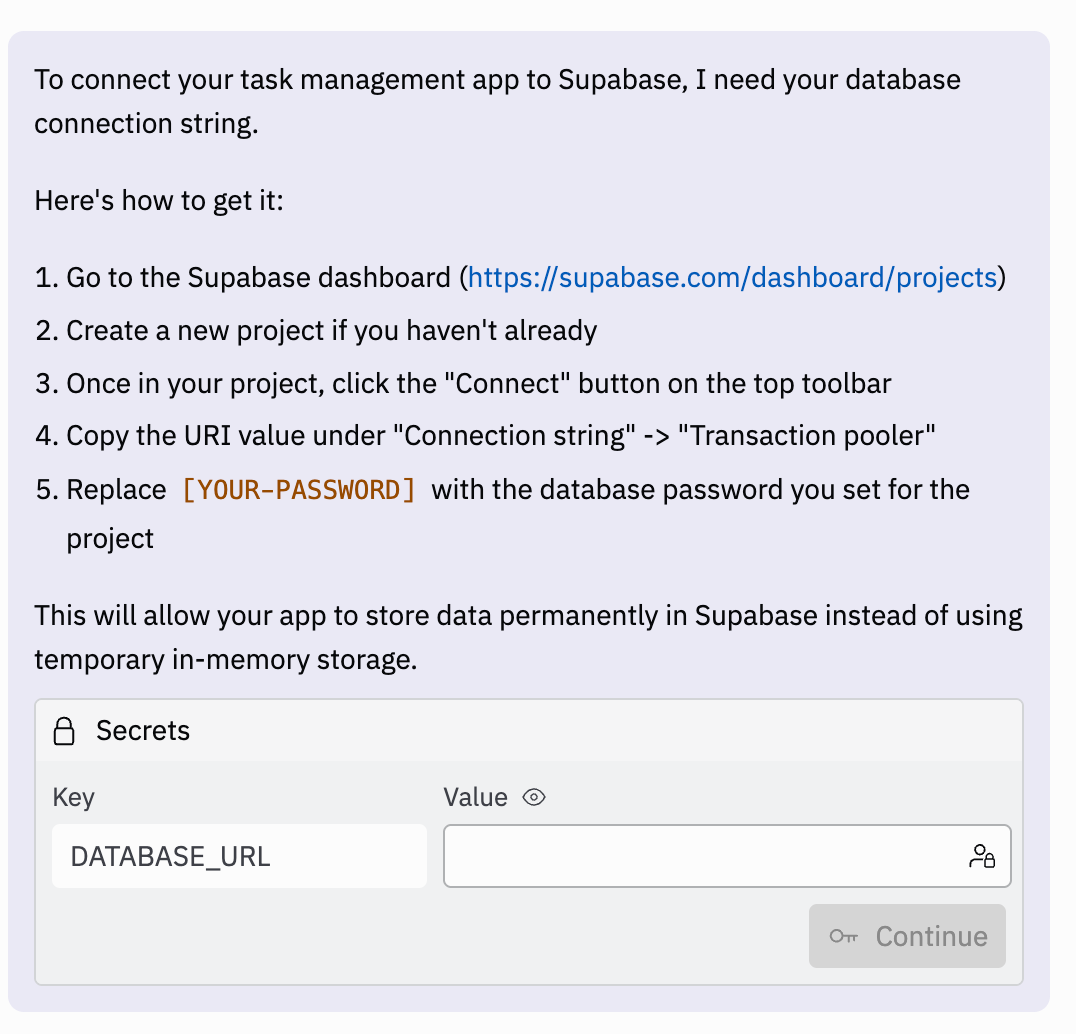

One Name, Many Clones

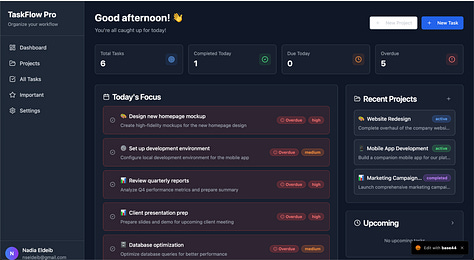

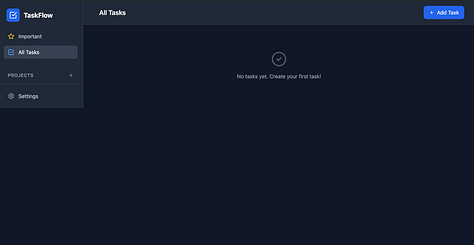

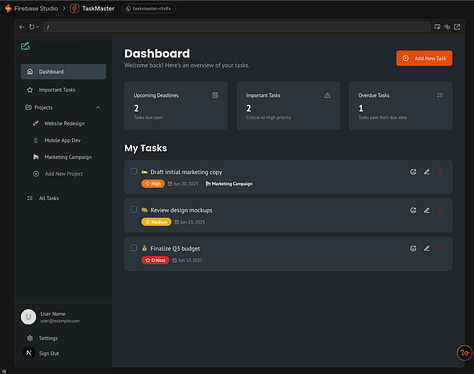

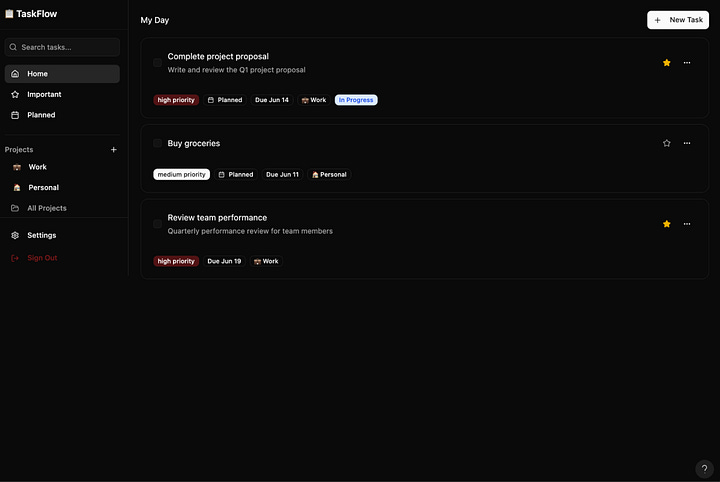

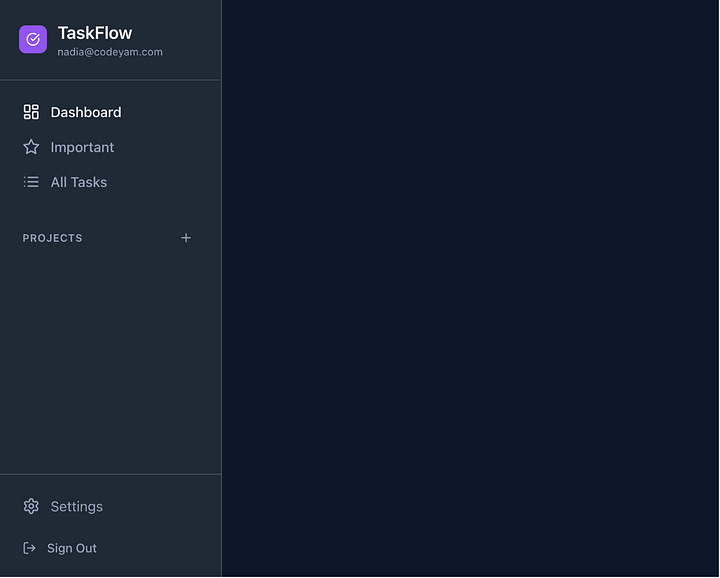

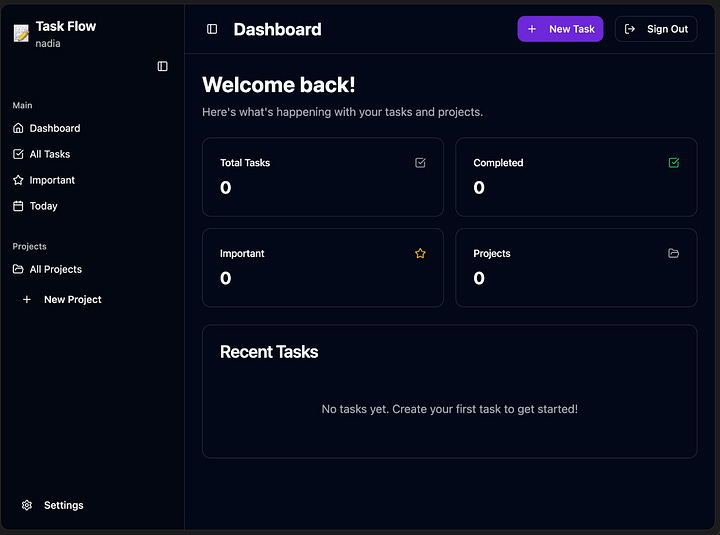

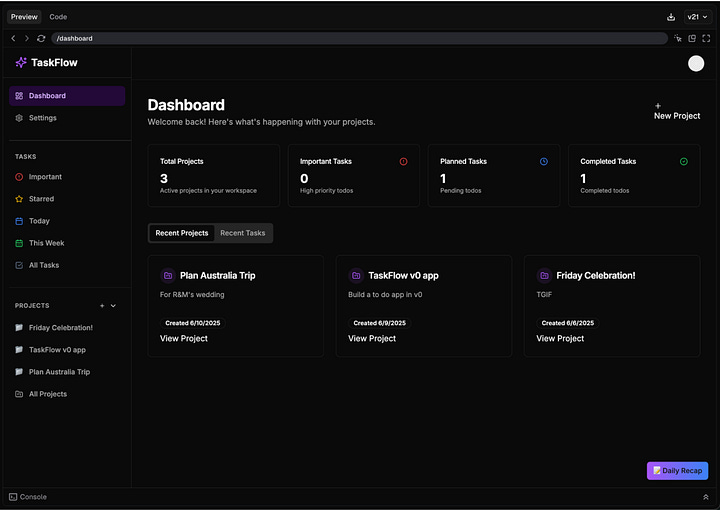

Most tools returned apps with nearly identical names (TaskFlow, TaskFlow Pro, TaskMaster, etc.) despite no branding prompt. If your intent is to build something unique, plan to explicitly prompt to change the name and branding.

That said, despite the same prompts, each interface had slight differences. Compare / contrast:

Branding can also be changed later, often with help from Claude or ChatGPT. You can use those tools to prompt for color schemes and fonts, then pick a winner and suggest it to the vibe coding tool, leading to a polished overall look-and-feel post-build. This was also surprisingly fun!

QA Is Critical

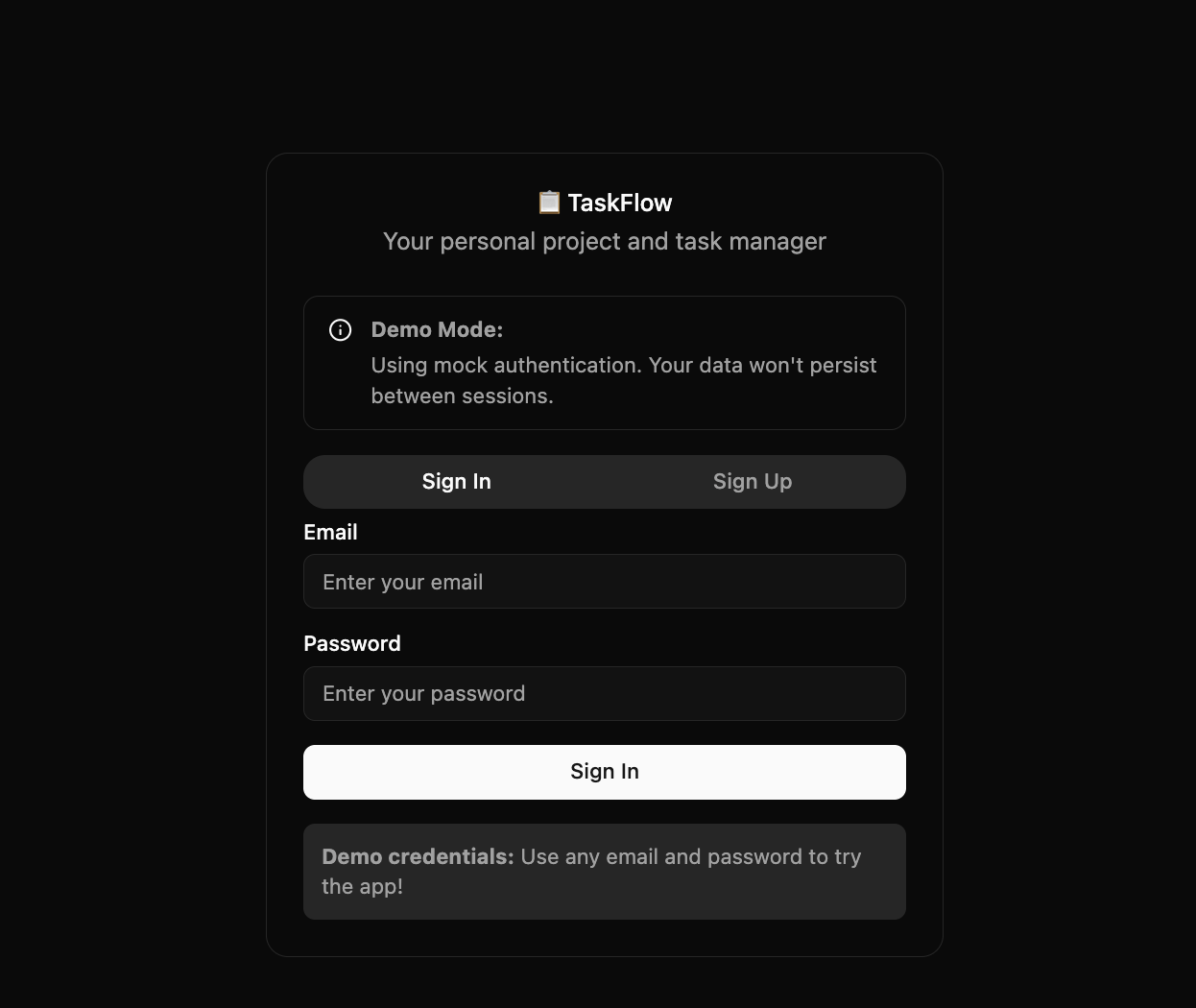

One major learning: QA is critical. You can’t assume that what you see in the preview environment matches what you’ll see after you deploy. I had some wild surprises: on my first deploy, for example, Lovable produced a landing and auth page totally different from the one I’d seen in the preview, and it didn’t work at all.

While some tools did show pre-filled data to help give you a sense of the app, others maintained the null state (e.g. as a new user of a to-do app, you’d have no projects or tasks). Ultimately, viewing both states is helpful.

Many of these tools could make manual testing easier. There are some conveniences, such as the ability to navigate directly by page (“/dashboard” vs. “/login,” for example), but in most cases I needed to manually create a real user and sign in with it to test.

Figma Make was a notable positive exception here, facilitating easier testing through dummy data and users (reminiscent of Stripe facilitating easier payment testing with their 4242 test card number).
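To make the idea of dummy data and users concrete, here is a minimal sketch of a fixture builder for a to-do app that can produce either the empty state or a pre-filled one. It is not how Figma Make or any other tool implements this; every type, function name, and sample value below is hypothetical.

```typescript
interface Task {
  id: string;
  title: string;
  done: boolean;
}

interface Project {
  id: string;
  name: string;
  tasks: Task[];
}

interface TestUser {
  email: string;
  displayName: string;
}

interface Fixture {
  user: TestUser;
  projects: Project[];
}

type FixtureState = "empty" | "populated";

// Builds a dummy user plus either no projects (the new-user view)
// or one sample project with a mix of done and open tasks.
function buildFixture(state: FixtureState): Fixture {
  const user: TestUser = {
    email: "test.user@example.com",
    displayName: "Test User",
  };
  if (state === "empty") {
    return { user, projects: [] };
  }
  return {
    user,
    projects: [
      {
        id: "p1",
        name: "Launch checklist",
        tasks: [
          { id: "t1", title: "Write release notes", done: true },
          { id: "t2", title: "Test sign-up flow", done: false },
        ],
      },
    ],
  };
}

// Counts tasks that are not yet done across all projects in a fixture.
function countOpenTasks(fixture: Fixture): number {
  return fixture.projects.reduce(
    (total, project) => total + project.tasks.filter((t) => !t.done).length,
    0,
  );
}
```

Loading `buildFixture("empty")` and `buildFixture("populated")` in turn gives a quick way to review both states without creating a real account each time.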

In some tools, private keys and other sensitive data may inadvertently appear in your code, in the chat history, or both, and that content is visible to anyone if the project is public (e.g. this Reddit and this LinkedIn thread). Free users of Lovable, Replit, and Base44 should note that projects are public by default. Always check.
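One lightweight way to check is to scan generated code or an exported chat history for strings that look like credentials before making anything public. The sketch below is an assumption-laden illustration, not a complete secret scanner: the three patterns (JWT-shaped tokens, Stripe-style `sk_live_`/`sk_test_` keys, and PEM private key headers) are examples we chose, and `findLikelySecrets` is a hypothetical helper.

```typescript
interface SecretPattern {
  label: string;
  regex: RegExp;
}

// Illustrative patterns only; real scanners cover many more formats.
const SECRET_PATTERNS: SecretPattern[] = [
  {
    label: "JWT-like token",
    regex: /eyJ[A-Za-z0-9_-]{10,}\.[A-Za-z0-9_-]{10,}\.[A-Za-z0-9_-]{10,}/g,
  },
  {
    label: "Stripe-style secret key",
    regex: /sk_(live|test)_[A-Za-z0-9]{16,}/g,
  },
  {
    label: "PEM private key header",
    regex: /-----BEGIN [A-Z ]*PRIVATE KEY-----/g,
  },
];

interface Finding {
  label: string;
  preview: string; // first few characters only, so the report itself leaks little
}

// Returns one finding per substring that matches any of the patterns above.
function findLikelySecrets(text: string): Finding[] {
  const findings: Finding[] = [];
  for (const pattern of SECRET_PATTERNS) {
    // With the global flag, match() returns every match or null.
    const matches = text.match(pattern.regex) || [];
    for (const match of matches) {
      findings.push({ label: pattern.label, preview: match.slice(0, 8) + "..." });
    }
  }
  return findings;
}
```

A clean result here does not prove a project is safe to publish; it only catches the most recognizable shapes, so a manual read-through is still worthwhile.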

In general, these tools require significant oversight and, if you’re not familiar with writing tests or manually QAing software, you’re likely to miss bugs and issues. That’s fine for side projects or prototypes, but for pushing meaningful software to production you need to be more careful.

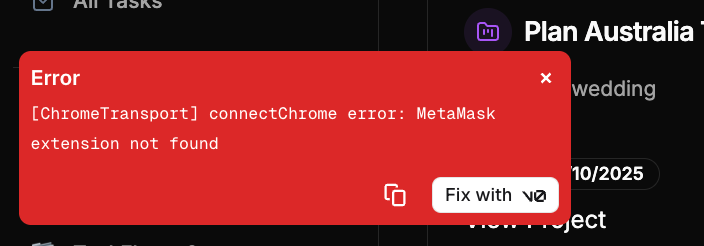

Deployment Hurdles

Most tools offer GitHub integration or live deployment. Notable exceptions: Replit, Firebase Studio, and Base44.

Replit paywalls production deploys behind a subscription. Firebase Studio requires you to link a Google Cloud Billing account before you can proceed. Base44 requires you to upgrade to a paid plan to be able to export to GitHub.

Understandable? Sure. But this approach creates a wall for learners and slows experimentation. We’d love to see monetization models that charge for value, not for basic feedback loops.

v0 had one of the smoothest deployment flows (through Vercel, of course). There’s also a new sync-to-GitHub (Beta) feature that seems promising but was initially buggy: it was not clear when changes were automatically committed, and committing directly to main brought extra quirks.

Takeaways

Yes, you can build multi-page apps using only natural language.

But results are mixed: tool maturity and UX vary widely.

Supabase (database) integration is a significant friction point with clear room for improvement.

Iteration outperforms one-shot builds in quality and clarity so far.

Branding and QA still matter. Don’t skip them.

Tooling needs to better support users who sit between “beginner” and “expert” developers.

You can see the results, including UI previews and the full chat history, here:

v0 by Vercel (and on GitHub)

Firebase Studio (formerly IDX)

Right now, we’re using v0 under a paid plan, though Supabase connection issues may drive us to explore other options. We also have access to Figma Make (Beta) via our paid Figma plan; however, this tool (which, to be fair, is in beta) seems to be at an earlier stage than the others we tried. Every tool tested shows promise and all of them are evolving rapidly.

An important caveat: this experiment relied only on natural language prompts to interact with the vibe coding agents and tools. Some tools also support interaction through visuals, drawing, element selection, and other methods that looked interesting but were not tested this time.

Final Thoughts

Vibe coding is powerful. Fast prototyping plus AI-native feedback loops (like what we’re building at CodeYam) can dramatically reshape how software gets made.

But today’s tools still need refinement, especially around integrations, iteration, and user flexibility. We’ll continue exploring the space and building tools that bridge these gaps.

If you’ve had similar experiences with these tools (or others), we’d love to compare notes. Feel free to reply or reach out at hello@codeyam.com.